Plugin: Automated Evaluation Tokenizer

The plugin tokenizes both the target and the reference text in RBX files.
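As a rough illustration of what tokenizing a target/reference pair involves, here is a minimal sketch. It assumes a simple rule where each run of word characters is one token and each punctuation mark is its own token. The rule and the function names `tokenize` and `tokenize_pair` are hypothetical. The plugin's actual tokenization rules and the way it reads RBX files are not documented here.

```python
import re

# Hypothetical rule (not the plugin's documented behaviour): a run of word
# characters is one token, and every other non-space character is its own token.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Split one segment into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


def tokenize_pair(target: str, reference: str) -> tuple[list[str], list[str]]:
    """Tokenize a target segment and its reference with the same rule."""
    return tokenize(target), tokenize(reference)


if __name__ == "__main__":
    target_tokens, reference_tokens = tokenize_pair(
        "The cat sat on the mat.", "The cat was sitting on the mat!"
    )
    print(target_tokens)     # ['The', 'cat', 'sat', 'on', 'the', 'mat', '.']
    print(reference_tokens)  # ['The', 'cat', 'was', 'sitting', 'on', 'the', 'mat', '!']
```

The important point is that target and reference go through the same rule, so any later comparison between them is made on consistent units.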

Note

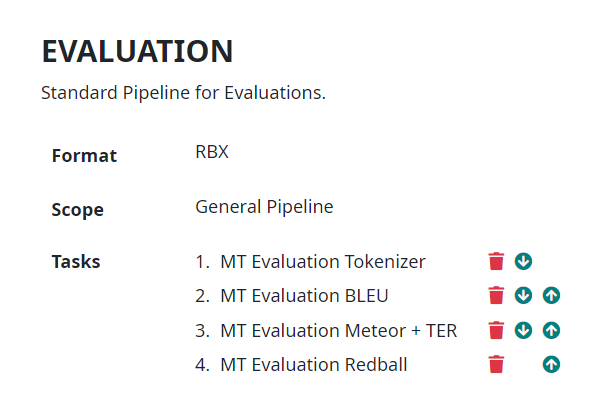

Evaluation plugins positioning

The Tokenizer works together with the other automated evaluation plugins (BLEU, Meteor + TER and/or Redball) to provide the full set of automated evaluation features.

The Automated Evaluation Tokenizer plugin provides tokenization, a crucial preparation step for all evaluations, along with the calculation of Meteor, TER, chrF and BLEU scores. In turn, the Automated Evaluation Redball plugin provides a segment-level graphical representation of the differences between the target and reference translations.
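To show why tokenization is a preparation step, the sketch below compares an already tokenized target with its reference using a word-level Levenshtein distance. This is the kind of segment-level difference that edit-based comparisons count. It is a generic illustration under that assumption, not the implementation used by any of the evaluation plugins. The name `token_edit_distance` is hypothetical.

```python
def token_edit_distance(target: list[str], reference: list[str]) -> int:
    """Minimum number of token insertions, deletions and substitutions
    needed to turn `target` into `reference` (word-level Levenshtein)."""
    # At the start of each outer iteration, previous[j] is the distance between
    # the target tokens processed so far and the first j reference tokens.
    previous = list(range(len(reference) + 1))
    for i, target_token in enumerate(target, start=1):
        current = [i]  # turning i target tokens into zero reference tokens
        for j, reference_token in enumerate(reference, start=1):
            substitution_cost = 0 if target_token == reference_token else 1
            current.append(min(
                previous[j] + 1,                      # delete the target token
                current[j - 1] + 1,                   # insert the reference token
                previous[j - 1] + substitution_cost,  # match or substitute
            ))
        previous = current
    return previous[-1]


if __name__ == "__main__":
    target = ['The', 'cat', 'sat', 'on', 'the', 'mat', '.']
    reference = ['The', 'cat', 'was', 'sitting', 'on', 'the', 'mat', '!']
    print(token_edit_distance(target, reference))  # 3
```

Because the distance is counted in tokens, the same pair of sentences tokenized with different rules would yield a different score, which is why a single tokenization step has to run before the metrics.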

To access all automated evaluation features, use the following recommended order of tasks in the automated evaluation pipeline:

1. Automated Evaluation Tokenizer
2. Automated Evaluation BLEU
3. Automated Evaluation Meteor
4. Automated Evaluation Redball + Levenshtein
5. Automated Evaluation chrF
6. Automated Evaluation TER
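If a pipeline uses only some of these plugins, the recommended order can still be applied to that subset. The sketch below sorts a selection of plugin names by their position in the list above. `RECOMMENDED_ORDER` and `order_pipeline` are hypothetical helpers, and how pipelines are actually assembled in the product is not described here.

```python
# Recommended task order, copied from the plugin list in this page.
RECOMMENDED_ORDER = [
    "Automated Evaluation Tokenizer",
    "Automated Evaluation BLEU",
    "Automated Evaluation Meteor",
    "Automated Evaluation Redball + Levenshtein",
    "Automated Evaluation chrF",
    "Automated Evaluation TER",
]


def order_pipeline(selected: list[str]) -> list[str]:
    """Return the selected plugins sorted into the recommended order.

    Raises ValueError for any name that is not in RECOMMENDED_ORDER.
    """
    unknown = [name for name in selected if name not in RECOMMENDED_ORDER]
    if unknown:
        raise ValueError(f"Unknown plugin(s): {unknown}")
    return sorted(selected, key=RECOMMENDED_ORDER.index)
```

For example, `order_pipeline(["Automated Evaluation TER", "Automated Evaluation Tokenizer", "Automated Evaluation BLEU"])` returns the Tokenizer first, then BLEU, then TER.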

Advanced users may create pipelines that contain any combination of automated evaluation plugins, but the Automated Evaluation Tokenizer plugin is required for all automated evaluations and must be the first plugin in the pipeline.
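For custom pipelines, the two rules above (the Tokenizer must be present, and it must run first) can be checked before a run. This is a minimal sketch that assumes the pipeline is represented as an ordered list of plugin names. The name `check_pipeline` is hypothetical and is not part of the product.

```python
TOKENIZER = "Automated Evaluation Tokenizer"


def check_pipeline(pipeline: list[str]) -> None:
    """Raise ValueError unless the Tokenizer is included and runs first."""
    if TOKENIZER not in pipeline:  # also covers an empty pipeline
        raise ValueError(f"{TOKENIZER} is required for all automated evaluations")
    if pipeline[0] != TOKENIZER:
        raise ValueError(f"{TOKENIZER} must be the first plugin in the pipeline")
```

A pipeline such as `[TOKENIZER, "Automated Evaluation chrF"]` passes, while `["Automated Evaluation chrF", TOKENIZER]` is rejected because the Tokenizer is not first.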

Class: LTGear.Flowme.Plugin.Eval.Tokenizer

Pipeline Format: RBX (Evaluation)

Parameters: None