Automated Evaluation (EVAL)

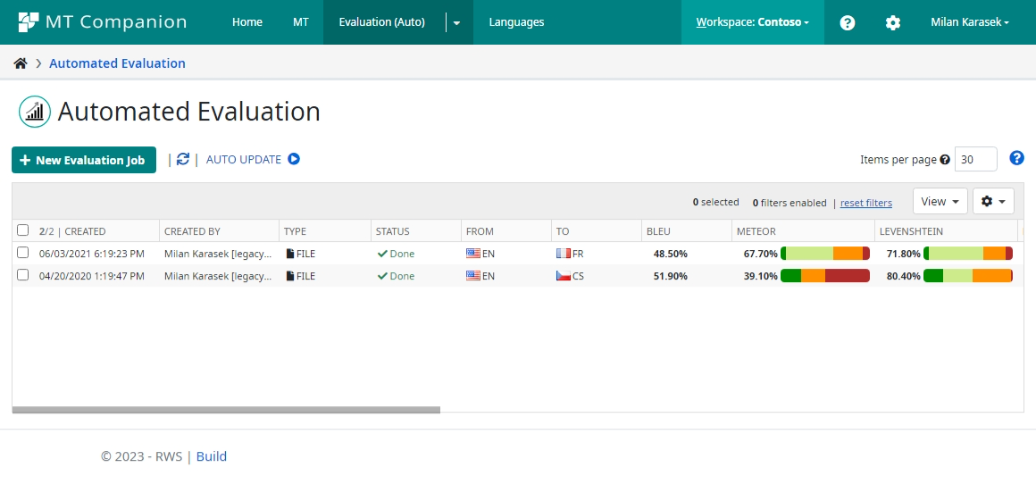

One of Companion's main features is Machine Translation (MT) evaluation. In the Evaluation (Auto) section of the Home page, users can create a New Evaluation Job for automated evaluation and view a list of previously executed automated evaluation jobs, together with their results and details.

The job list displayed on the page has the following columns:

| COLUMN NAME | DESCRIPTION |
|---|---|
| JobID | Unique job identifier |
| Created | Creation date of the job |
| Created By | Name of the user who created the job |
| Status | Status of execution (Done, Failed, or In-progress). The Status field also indicates whether the job is ON-LINE (executed via synchronous call, e.g., via the Evaluate API endpoint) or FILE (for Evaluate file processing). |
| Configuration Alias (CONFIG) | Alias of the evaluation Configuration used, as specified in Configurations (e.g., CTNS_EVAL) |
| MT Config. Alias (MTCONFIG) | Alias of the MT Configuration. Used in case the user wants to perform an evaluation associated with a particular MT Configuration. |
| From | Code of the source language or culture |
| To | Code of the target language or culture |
| BLEU | Resulting BLEU Score with category distribution chart |
| Meteor | Resulting Meteor Score with category distribution chart |
| Levenshtein | Resulting Levenshtein Score with category distribution chart |
| RedBall | Resulting RedBall Score with category distribution chart |
| TER | Resulting TER Score with category distribution chart |
| Total Segments | Total number of segments in the input data set |
| Valid Segments | Number of valid segments (used in the evaluation) in the data set |
| Invalid Segments | Number of invalid segments (excluded from the evaluation) in the data set |
| Total Words (Target) | Total word count of the target segments |
| Valid Words (Target) | Word count of valid target segments (used in the evaluation) |
| Invalid Words (Target) | Word count of invalid target segments (excluded from the evaluation) |
| Total Words (Reference) | Total word count of the reference segments |
| Valid Words (Reference) | Word count of valid reference segments (used in the evaluation) |
| Invalid Words (Reference) | Word count of invalid reference segments (excluded from the evaluation) |
| Total Words (Source) | Total word count of the source segments |
| Valid Words (Source) | Word count of valid source segments (used in the evaluation) |
| Invalid Words (Source) | Word count of invalid source segments (excluded from the evaluation) |
| Valid Characters (Target) | Total character count of the target segments |
| Valid Characters (Reference) | Total character count of the reference segments |
| Valid Characters (Source) | Total character count of the source segments |
| Note | User’s note entered for job |
| Status Message | Error message in case of errors or warnings. |
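The relationship between the Total, Valid, and Invalid columns can be illustrated with a small sketch. The `EvalJobRow` class and its field names are hypothetical and are not part of Companion; the sketch also assumes that every segment is counted as either valid or invalid, which the column descriptions suggest but do not state outright.

```python
from dataclasses import dataclass


@dataclass
class EvalJobRow:
    """Hypothetical in-memory view of a few job list columns."""
    job_id: str
    status: str            # "Done", "Failed", or "In-progress"
    total_segments: int    # Total Segments
    valid_segments: int    # Valid Segments (used in the evaluation)
    invalid_segments: int  # Invalid Segments (excluded from the evaluation)


def segment_counts_consistent(row: EvalJobRow) -> bool:
    """Assumed invariant: total = valid + invalid."""
    return row.total_segments == row.valid_segments + row.invalid_segments


def valid_ratio(row: EvalJobRow) -> float:
    """Share of input segments that were actually evaluated."""
    if row.total_segments == 0:
        return 0.0
    return row.valid_segments / row.total_segments
```

A low valid ratio is a hint that much of the input data set was excluded, so the reported scores describe only part of the file.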

Users can sort and filter data in the grid. For more details, see Job List Reference.
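For readers who work with exported job data outside the web app, the same kind of sorting and filtering can be sketched in a few lines. This is only an illustration: the row dictionaries, the sample values, and the `filter_jobs` and `sort_jobs` helpers are invented for the example and do not describe how the Companion grid is implemented.

```python
from typing import Any, Dict, List

# Invented sample rows keyed by column name; the values are not real results.
jobs: List[Dict[str, Any]] = [
    {"JobID": "job-1", "Status": "Done", "To": "de-DE", "BLEU": 41.2},
    {"JobID": "job-2", "Status": "Failed", "To": "de-DE", "BLEU": None},
    {"JobID": "job-3", "Status": "Done", "To": "fr-FR", "BLEU": 55.0},
]


def filter_jobs(rows: List[Dict[str, Any]], criteria: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Keep rows whose columns equal every value given in criteria."""
    return [r for r in rows if all(r.get(col) == val for col, val in criteria.items())]


def sort_jobs(rows: List[Dict[str, Any]], column: str, descending: bool = False) -> List[Dict[str, Any]]:
    """Sort rows by one column; rows without a value are placed last."""
    present = [r for r in rows if r.get(column) is not None]
    missing = [r for r in rows if r.get(column) is None]
    return sorted(present, key=lambda r: r[column], reverse=descending) + missing


# Example: completed jobs, best BLEU first.
best_first = sort_jobs(filter_jobs(jobs, {"Status": "Done"}), "BLEU", descending=True)
```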

When one or more jobs are selected from the list, the following actions are available:

Show Job Info displays the job summary (for jobs requested via an API call or via the input form on the web) and the pipeline information, including the results of each task in the pipeline. The Job Info modal window also contains a detailed analysis for each metric. For more information, see the Automated Evaluation Job Details section.

Show RBX displays the entire RBX containing all evaluation information in a structured form. RBX is the internal file format that stores all evaluation data together with the configuration metadata driving the job's pipeline.

Show segment details shows all evaluated segments with detailed information about their scores. For more information, see the Automated Evaluation Segment Details section.

Download complete RBX file allows users to download the resulting RBX file.

Remove deletes all selected jobs from the list. This action is available only to Companion Admins and can be applied to more than one item in the grid.

To create a new automated evaluation job, click the New Job button in the Evaluation (Auto) section of the Companion web app. A dialog box is displayed, prompting the user to enter all necessary parameters of the job. For more information about this dialog, see the New Automated Evaluation Job section.

The data in the Automated Evaluation grid are loaded when the page is loaded. In order to prevent disruptions for the user, the data on the page do not automatically refresh by default. To enable auto-refresh of the data, click the Auto update button. When Auto update is switched on, the data in the grid are refreshed every 5 seconds.

Note: The Auto update feature is useful when you are processing large files, so it is automatically switched on when a file is submitted for evaluation.
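The Auto update behavior described above amounts to a simple polling loop. The following is a minimal sketch of that idea, not Companion's actual implementation: `fetch_jobs`, `render`, and `is_enabled` are hypothetical callbacks supplied by the caller, and only the 5-second interval comes from the text.

```python
import time
from typing import Any, Callable, List

REFRESH_INTERVAL_SECONDS = 5  # refresh interval stated in the documentation


def auto_update(
    fetch_jobs: Callable[[], List[Any]],     # hypothetical: loads the current job list
    render: Callable[[List[Any]], None],     # hypothetical: redraws the grid
    is_enabled: Callable[[], bool],          # hypothetical: state of the Auto update button
    interval: float = REFRESH_INTERVAL_SECONDS,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Refresh the grid repeatedly while Auto update is switched on.

    Returns the number of refreshes performed.
    """
    refreshes = 0
    while is_enabled():
        render(fetch_jobs())
        refreshes += 1
        sleep(interval)  # wait before the next refresh
    return refreshes
```

Passing `sleep` as a parameter keeps the loop easy to exercise without real waiting, for example by substituting a function that only records the requested delay.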