Comparative Assessment (CA) Job execution

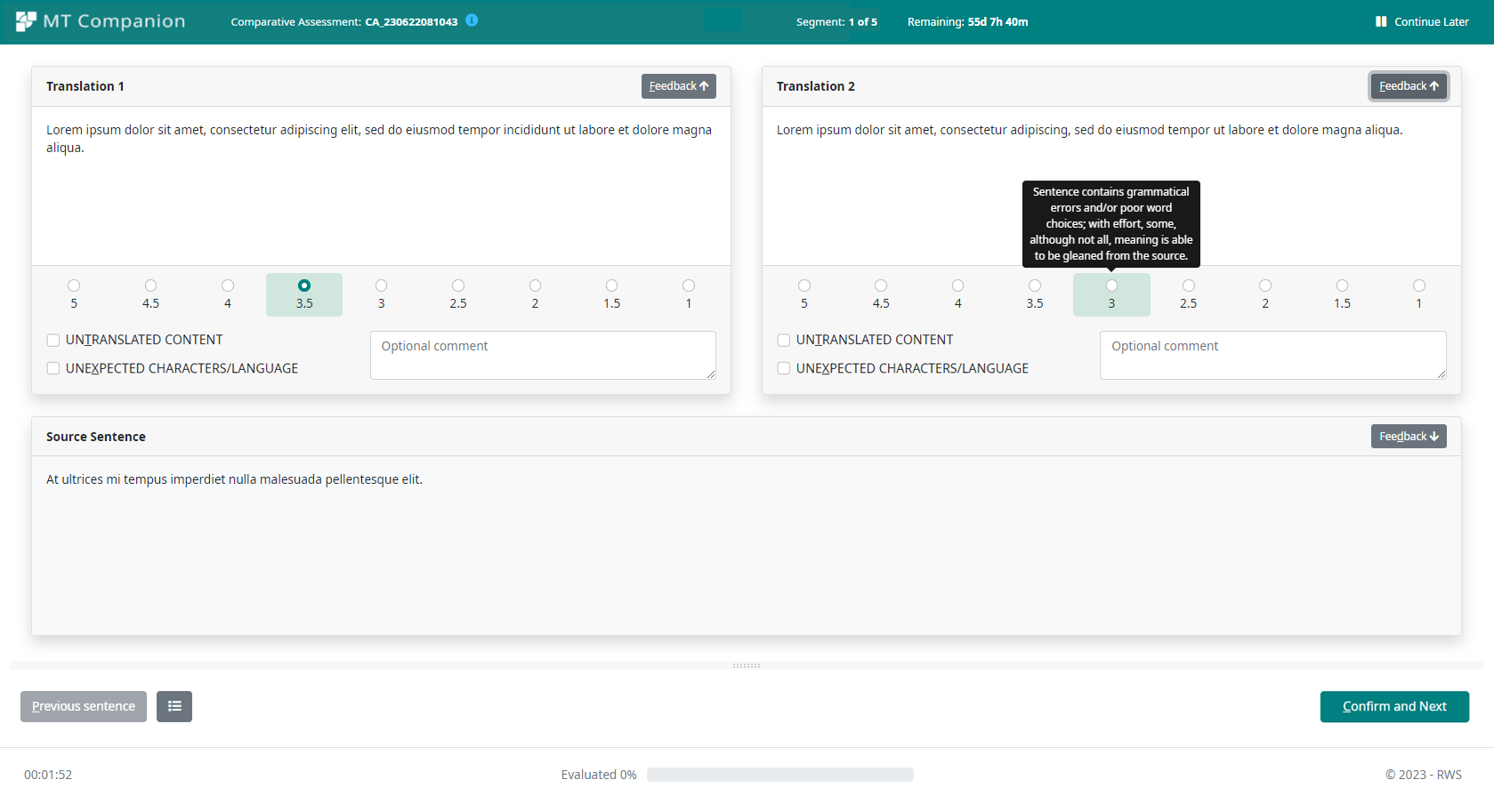

The goal of the Comparative Assessment job type is to compare the machine translation (MT) output of two different engines in order to select the more suitable engine. To perform this evaluation, the evaluator is presented with a job view that displays Translation 1 and Translation 2 (the outputs of two different MT models). The evaluator scores each MT output using the scoring system selected by the project manager.

In the example screenshot below, a 5-point scoring system has been selected.

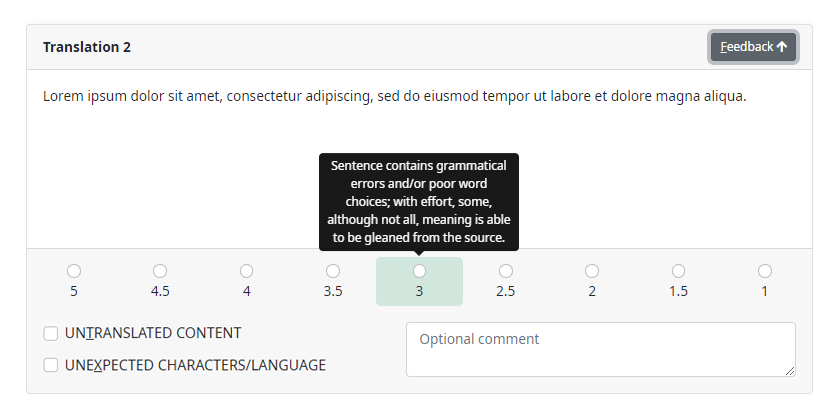

The definitions of the scoring system can be displayed by hovering over the scores, or by selecting the list icon in the bottom left of the screen.

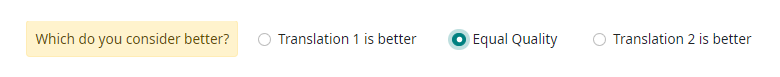

If both MT outputs receive the same score, the evaluator is prompted to select the better translation (if possible) before proceeding.

Reporting issues for a CA Job

If there is an issue with either of the MT outputs or the Source, the evaluator can provide feedback.

For issues with an MT output, the evaluator can tick the UNTRANSLATED CONTENT box if the segment contains untranslated text, or the UNEXPECTED CHARACTERS/LANGUAGE in target box if the text is in the wrong language. For any other issue, the evaluator can leave a comment describing the issue.

For issues with the Source, the evaluator can tick one of the following boxes:

- Linguistically Bad Source: the source contains segmentation, spelling, or grammar issues.
- Offensive Source: the source text may cause offense or discomfort to the reader.
- Specialist Source: the source is domain-specific and therefore cannot be validated by a non-specialist.

If there is some other issue, the evaluator can leave a comment indicating the issue.